TypeScript-conforming outputs with Ollama

- Author: Jack Youstra (@jackyoustra)

Author's Note: Hey! I'm moving on to AI work for some time, so the blog's going to be a bit more focused on that (assuming I have time or interesting things to write about). I'll still be doing some iOS work, but expect a higher frequency of AI-related posts. Because of that, the blog will talk about things that have already existed online for a bit and be a bit less technically minded. Let me know what you think!

Introduction

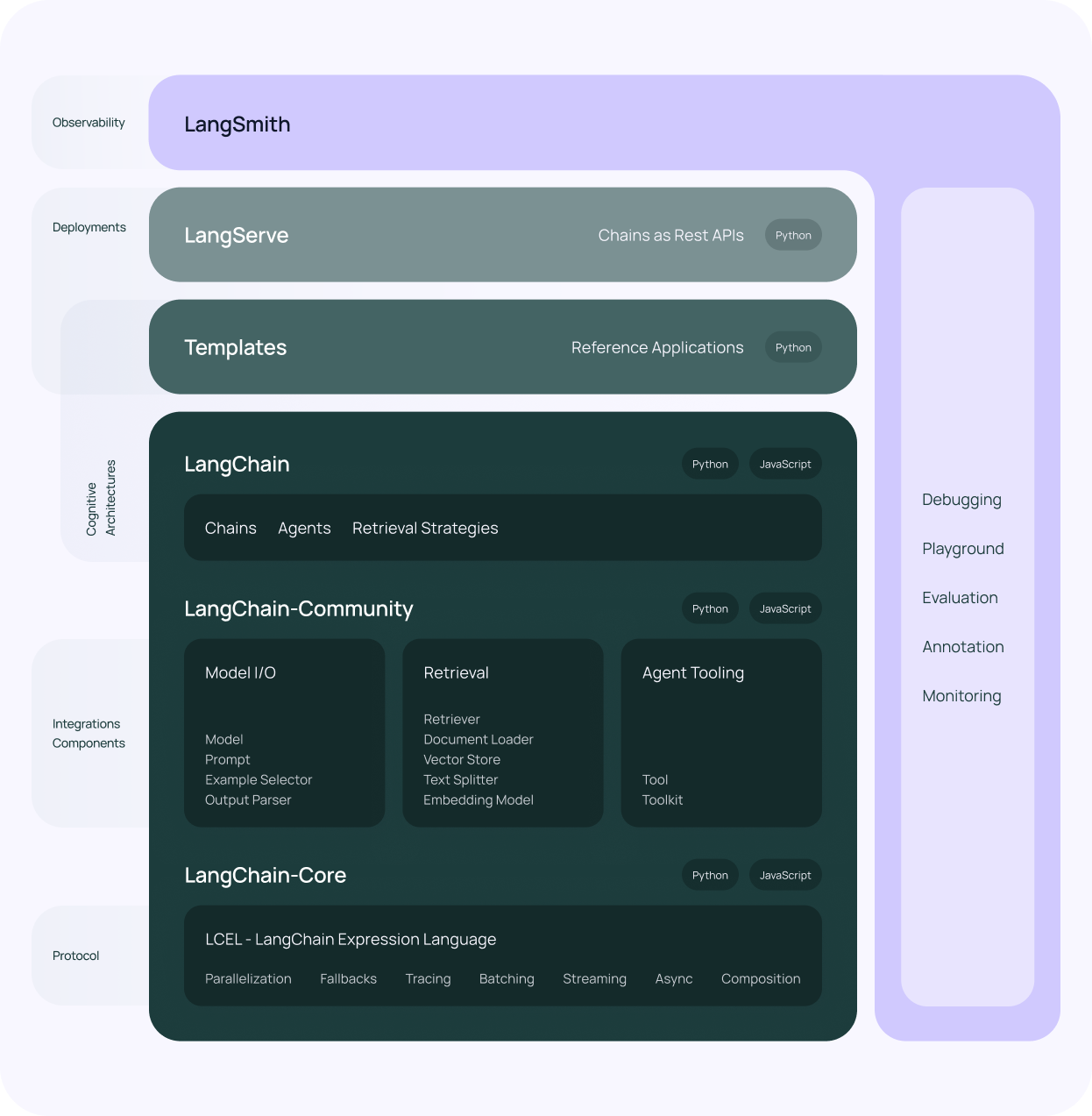

Generated content is becoming a pretty large part of the web, in both freeform text and images. Its use in technical circles is still pretty limited, though, because its output has a frustrating tendency to be rather unconstrained. Current solutions deal with this through chains of model calls, either hand-rolled or facilitated by platforms such as LangChain.

The key point to realize about LangChain is that it's a chain only for LLMs. Once you finish your preprocessing, you have very weak guarantees about the model's output or any intermediate state, even if you apply pretty rigorous training and postprocessing steps.

🦙🔧💻🔁? No!

Finetuning doesn't help here: language models are very delicate, regardless of whether an adversary (or an unfortunate user) has white-box or black-box access. If you want to put classic algorithmic programs in the middle of an LLM pipeline and expect them to work, you need to introduce types that you can guarantee the model will respect. No amount of prompt engineering or finetuning will give you that guarantee.

Right now, most services allow you to output JSON, but that's not enough. You need to be able to specify the types of the JSON you want to output, and you need to be able to do so in a way that the model can understand. You can do that now with llama.cpp (and, thus, Ollama), SGLang, and Hugging Face. Today, I'll show you how to do that with Ollama.

Background

Theory - Types

Suppose for our application we wanted to mock a ton of people and their ages, as generated by an LLM. We'd want the output to conform to a type: a Person type, with a name field that is a string and an age field that is a number. Here's how you can express this in TypeScript, a commonly-used (and imo very good) superset of JavaScript that allows you to specify types.

```typescript
type Person = {
  name: string;
  age: number;
}
```

This type encodes all of the information we need and nothing more. Hence, any valid algorithm to constrain the LLM output must at least have this type somewhere in it. With this in mind, let's look at how an LLM works so we can understand how we can use types with it.

Theory - LLMs

One of my favorite go-to guides on how an LLM works is this 3D model of an LLM. Feel free to explore it if you want to understand how LLMs work better. If you go to this model and click on the "softmax" section, you'll see the step where the LLM's raw scores (the logits) are turned into probabilities. These are the odds that the LLM assigns to each token in the vocabulary for the next token in the sequence (tokens are sampled one at a time). The LLM then samples from this distribution to get the next token, with the temperature parameter controlling how strongly the sampling favors the top tokens.
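To make the sampling step concrete, here's a minimal sketch in plain Python. The vocabulary and logit values are made up for illustration, and `softmax` and `sample_token` are names I picked for this post, not functions from any library.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw logits into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(vocab, logits, temperature=1.0, rng=random):
    """Sample one token from the vocabulary according to the softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Toy vocabulary and logits (assumed values, not from a real model).
vocab = ["{", "Hello", "Sure", "\""]
logits = [2.0, 1.0, 0.5, -1.0]

probs = softmax(logits)                  # ordinary sampling distribution
cold = softmax(logits, temperature=0.1)  # nearly all mass on the top token
next_token = sample_token(vocab, logits)
```

With a low temperature the top token takes almost all of the probability mass, which is why low-temperature sampling behaves almost like "just pick the best token".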

The key point to realize is that the LLM is a probabilistic model. It doesn't output a single value, but rather a distribution over the possible values. Generally speaking, the decision is an easy one: just pick the best or second-best token. Having a distribution gives us a very large advantage if we want to constrain the output of the LLM to a specific type. We just add one more step to the process after the logits are generated and eliminate all tokens that don't conform to the type we want at the given step.
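Here's a rough sketch of that extra step, again in plain Python with made-up numbers. The `allowed` flags stand in for whatever the type says is legal at this position. In this toy case only `{` is allowed, since a JSON object has to start with one. The function names are my own, not a library's.

```python
import math

def mask_logits(logits, allowed):
    """Set the logit of every disallowed token to -inf so it can never be sampled."""
    if not any(allowed):
        raise ValueError("at least one token must be allowed")
    return [x if ok else -math.inf for x, ok in zip(logits, allowed)]

def softmax(logits):
    """Convert logits to probabilities; exp(-inf) is 0.0, so masked tokens get zero mass."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy vocabulary and logits (assumed values): the unconstrained model prefers "Sure".
vocab = ["Sure", "{", "Here", " "]
logits = [3.0, 1.0, 2.5, 0.2]

# At the very start of a JSON object, only "{" conforms.
allowed = [token == "{" for token in vocab]

probs = softmax(mask_logits(logits, allowed))
```

The model's favorite token was "Sure", but after masking and renormalizing, all of the probability lands on `{`. The surviving tokens keep their relative odds, so the model still makes the choice whenever more than one token is valid.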

...Unfortunately, we have a TypeScript type, and it's not at all obvious how to turn that into something that can say whether or not the next token is valid. Fortunately, there are a plethora of tools to turn a TypeScript type into a different form called GBNF, which can act as a token-level validator.

Theory - GBNF

authoritative source: the GBNF readme

GBNF (GGML BNF) is llama.cpp's format for writing formal grammars. It extends Backus-Naur form, the notation classically used to specify the syntax of programming languages. Unlike a TypeScript type, which specifies the structure of a data value, a GBNF grammar specifies the structure of a language: the exact set of strings that count as valid. That difference is what makes it useful here. Given the text generated so far, the grammar has all the information necessary to determine which tokens are allowed to come next.

For our Person type, the GBNF would look something like this:

```
age-kv ::= "\"age\"" space ":" space number
char ::= [^"\\] | "\\" (["\\/bfnrt] | "u" [0-9a-fA-F] [0-9a-fA-F] [0-9a-fA-F] [0-9a-fA-F])
decimal-part ::= [0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9])?)?)?)?)?)?)?)?)?)?)?)?)?)?)?
integral-part ::= [0-9] | [1-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9] ([0-9])?)?)?)?)?)?)?)?)?)?)?)?)?)?)?
name-kv ::= "\"name\"" space ":" space string
number ::= ("-"? integral-part) ("." decimal-part)? ([eE] [-+]? integral-part)? space
root ::= "{" space name-kv "," space age-kv "}" space
space ::= " "?
string ::= "\"" char* "\"" space
```

So, bringing it all together: we can convert a TypeScript type into a GBNF grammar, and then use that grammar to validate the next token in the sequence.
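To get a feel for what "validate the next token" means, here's a toy sketch in Python. It does not parse GBNF and makes no claim to mirror how llama.cpp does it. It hardcodes a heavily simplified stand-in for the Person grammar (no optional spaces, letters-only names, integer ages) and works on characters rather than tokens. `SEGMENTS` and `allowed_next` are hypothetical names for this illustration.

```python
import string

LETTERS = set(string.ascii_letters + " ")
DIGITS = set(string.digits)

# Simplified, hypothetical stand-in for the Person grammar:
#   root ::= '{"name":"' letter+ '","age":' digit+ '}'
# Every "plus" segment is followed by a literal segment.
SEGMENTS = [
    ("lit", '{"name":"'),
    ("plus", LETTERS),
    ("lit", '","age":'),
    ("plus", DIGITS),
    ("lit", "}"),
]

def allowed_next(prefix):
    """Return the set of characters that may follow `prefix`.

    Returns None if `prefix` is already invalid, and an empty set if it is complete.
    """
    pos = 0
    for i, (kind, value) in enumerate(SEGMENTS):
        if kind == "lit":
            for ch in value:
                if pos == len(prefix):
                    return {ch}      # the literal dictates exactly one next character
                if prefix[pos] != ch:
                    return None      # the prefix deviates from the grammar
                pos += 1
        else:  # "plus": one or more characters from the class
            count = 0
            while pos < len(prefix) and prefix[pos] in value:
                pos += 1
                count += 1
            if pos == len(prefix):
                options = set(value)  # we can always extend the repetition
                if count >= 1:
                    # after at least one character, the next literal may also begin
                    options.add(SEGMENTS[i + 1][1][0])
                return options
            if count == 0:
                return None          # the repetition needs at least one character
    return set() if pos == len(prefix) else None
```

For example, `allowed_next("")` only permits `{`, while `allowed_next('{"name":"Al')` permits another letter or the closing quote. A real implementation does the same kind of bookkeeping over the full grammar, then checks each candidate token against the allowed continuations.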

Implementation

Step 1: Convert TypeScript to GBNF

llama.cpp has a script called ts-type-to-grammar.sh which implements this whole pipeline. Just clone the repository and run it!

Step 2: Use the GBNF to validate the next token

Doing this with Ollama is a lil tricky because the PRs for grammar support haven't been merged. Either wait a bit for one to be merged, or clone Ollama, rebase the grammar PR branch on main, and build from source.

After that, take your GBNF from step one and pass it into an Ollama request:

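```python
import requests

# Hypothetical inputs: the prompt text and the file holding the GBNF from step 1.
prompt = "Generate a fictional person as JSON."
with open("person.gbnf") as f:
    gbnfspec = f.read()

response = requests.post('http://localhost:11434/api/generate', json={
    "model": "phi3:14b-medium-128k-instruct-q6_K",  # or whatever you want
    "prompt": prompt,
    "options": {
        "num_ctx": 1024 * 128
    },
    "grammar": gbnfspec,  # only understood by a build that includes the grammar PR
    "stream": False
})

print(response.json()["response"])  # the generated text
```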
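Once the text comes back, you can hand it to ordinary code. Here's a small sketch of turning the generated string into a typed Python value. The `Person` dataclass mirrors the TypeScript type from earlier, `parse_person` is a name I made up, and the sample string is invented rather than real model output.

```python
import json
from dataclasses import dataclass

@dataclass
class Person:
    name: str
    age: float  # a JSON number may be an integer or a decimal

def parse_person(raw: str) -> Person:
    """Parse grammar-constrained output and double-check the field types."""
    data = json.loads(raw)
    name, age = data["name"], data["age"]
    if not isinstance(name, str):
        raise TypeError("name must be a string")
    # bool is a subclass of int in Python, so reject it explicitly
    if isinstance(age, bool) or not isinstance(age, (int, float)):
        raise TypeError("age must be a number")
    return Person(name=name, age=age)

# Invented sample of what a grammar-constrained response could look like.
person = parse_person('{"name": "Ada Lovelace", "age": 36}')
```

With the grammar in place these checks should never fail. Keeping them is cheap insurance if the request ever goes to a build without grammar support.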

And that's it! You now have a way to generate content that conforms to a specific type. Enjoy!